Data analysis II

Webscraping, advanced

Laurent Bergé

University of Bordeaux, BxSE

09/12/2021

A large project

- you have to deal with a large project, involving scraping 1000's of webpages

- the pages are in the vector all_webpages

- you've written a scraper function, scrape_format, that scrapes a single web page and formats it appropriately


A large project: Code

If you wrote the following code or similar:

```r
n = length(all_webpages)
res = vector("list", n)
for(i in 1:n){
  res[[i]] = scrape_format(all_webpages[i])
}
```

Then congratulations! 🎉 You didn't miss any of the rookie mistakes!

What are the problems with my code?

This code breaks the three laws of webscraping.

The three laws of webscraping

- Thou shalt not be in a hurry

- Thou shalt separate the scraping from the formatting

- Thou shalt anticipate failure

Thou shalt not be in a hurry

- you would soon have figured out this law by yourself

- requests are costly for the server: each one costs run time and bandwidth

- it's very easy to flood a server with thousands of requests per second: just write a loop


Law 1: What to do

- always wait a few seconds between two page requests

- it's a bit of a gentleman's agreement to scrape slowly★

- anyway, the incentives are aligned: break this agreement and you will quickly feel the consequences!
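A minimal Python sketch of this rule, assuming a hypothetical fetch(url) function, supplied by you, that downloads one page: the loop pauses for a base delay plus a small random jitter between two requests. The delay values are illustrative assumptions, not figures required by any website; sleep is a parameter only so that the pauses can be checked without actually waiting.

```python
import random
import time
from typing import Callable, Iterable, List


def polite_crawl(urls: Iterable[str],
                 fetch: Callable[[str], str],
                 base_delay: float = 2.0,
                 jitter: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Fetch the URLs one by one, pausing between two consecutive requests.

    `fetch` is a hypothetical download function provided by the caller;
    `sleep` can be replaced (e.g. in tests) to avoid really waiting.
    """
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            # wait base_delay seconds plus a random extra of up to `jitter` seconds
            sleep(base_delay + random.uniform(0, jitter))
        pages.append(fetch(url))
    return pages
```

With 1000 pages and these defaults (2.5 seconds on average), the waits alone add up to roughly 40 minutes: that is the price of being polite.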

Thou shalt separate the scraping from the formatting

Remember that you have 1000's of pages to scrape.

Typically:

- scrape a few pages

- write code to extract the relevant data from the HTML

- create a function out of it and apply it systematically to each page
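As an illustration of the last two steps, here is a Python sketch of an extraction function, under the hypothetical assumption that the data of interest sits in the <h2> elements of each page. It only uses the standard library's html.parser.

```python
from html.parser import HTMLParser
from typing import List


class H2Extractor(HTMLParser):
    """Collect the text contained in every <h2> element."""

    def __init__(self) -> None:
        super().__init__()
        self.in_h2 = False
        self.titles: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_h2 = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_h2 = False

    def handle_data(self, data):
        # text of nested tags (e.g. <b> inside <h2>) is kept as well
        if self.in_h2:
            self.titles[-1] += data


def extract_titles(html: str) -> List[str]:
    """Return the stripped text of all <h2> elements of a page."""
    parser = H2Extractor()
    parser.feed(html)
    parser.close()
    return [t.strip() for t in parser.titles]
```

Once it works on the few pages scraped, the same function can be applied to every page.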

Law 2: Consequences of infringement


Law 2: What to do?

In a large project, you cannot anticipate the format of all the pages from a mere sub-selection. So:

- always save the HTML code on disk after scraping★

- apply, and then debug, the formatting script only after all the data, or a large chunk of it, has been scraped

Because:

- the data always change

- you always end up wanting more than you anticipated, and rest assured this epiphany only happens ex post!
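The two-step workflow can be sketched in Python as follows, assuming hypothetical fetch and extract functions supplied by you. The scraping step only writes raw HTML to disk; the formatting step reads the saved files back, so it can be rerun and debugged as often as needed without sending a single new request. The zero-padded file prefix is a choice made here so that sorted file names follow the scraping order.

```python
import re
from pathlib import Path
from typing import Callable, Dict, List


def url_to_filename(index: int, url: str) -> str:
    """Build a tidy, file-system-safe name such as 'id_00001_https_a_org_x.html'."""
    safe = re.sub(r"[^A-Za-z0-9]+", "_", url).strip("_")
    return f"id_{index:05d}_{safe}.html"


def scrape_to_disk(urls: List[str], fetch: Callable[[str], str], save_dir: Path) -> None:
    """Step 1: download each page and save its raw HTML, nothing more."""
    save_dir.mkdir(parents=True, exist_ok=True)
    for i, url in enumerate(urls, start=1):
        html = fetch(url)
        (save_dir / url_to_filename(i, url)).write_text(html, encoding="utf-8")
        # (a pause between two requests belongs here, see Law 1)


def format_from_disk(save_dir: Path, extract: Callable[[str], Dict]) -> List[Dict]:
    """Step 2: parse the saved files; can be rerun without touching the server."""
    files = sorted(save_dir.glob("*.html"))
    return [extract(f.read_text(encoding="utf-8")) for f in files]
```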

Thou shalt anticipate failure

Be prepared, even if it's coded properly:

Your code will fail!

If you don't acknowledge that... well... I'm afraid you're going to suffer.

Law 3: Why can my scraping function fail?

There are numerous reasons why scraping functions fail:

- a CAPTCHA catches you red-handed and diverts the page requests

- your IP gets blocked

- the page you get is different from the one you anticipated

- the URL doesn't exist

- timeout

- other random reasons
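Several of these failures (CAPTCHA, blocked IP, unexpected page) still return a page, so they are only caught if you check its content. The Python sketch below illustrates such a check; the marker strings are hypothetical and must be adapted to each website.

```python
from typing import Iterable, Optional

# Hypothetical markers: adapt them to the website being scraped.
BLOCK_MARKERS = ("captcha", "access denied", "too many requests")


def check_page(html: str, expected_marker: str,
               block_markers: Iterable[str] = BLOCK_MARKERS) -> Optional[str]:
    """Return None if the page looks as expected, otherwise a short diagnosis."""
    lowered = html.lower()
    for marker in block_markers:
        if marker in lowered:
            return f"blocked: found '{marker}'"
    # the page is not blocked, but is it the page we wanted?
    if expected_marker.lower() not in lowered:
        return f"unexpected page: '{expected_marker}' not found"
    return None
```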

Law 3: Consequences

If your scraping fails, you have to:

- find out where your scraping stopped☆

- try to debug ex post

- manually restart the loop where it last stopped, etc...

- in sum: pain, pain, pain, all of which could have been avoided

Law 3: What to do?

anticipate failure by:

- checking the pages you obtain: are they what you expect? Save the problematic pages and stop as soon as one shows up.

- catching errors such as connection problems: parse the error, and either continue or stop depending on its severity.

- catching higher-level errors

- writing the loop so that it restarts where the last problem occurred
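A minimal Python sketch of these ideas, under stated assumptions: fetch is a hypothetical download function that raises ConnectionError or TimeoutError on network trouble, and a hypothetical PageNotFound exception for missing URLs. Missing pages are recorded and skipped, transient network errors are retried a few times with a growing wait, and anything else stops the loop. Progress is kept here in two dictionaries for brevity (in practice it would be the files saved on disk), so calling the function again resumes where it stopped.

```python
import time
from typing import Callable, Dict, List


class PageNotFound(Exception):
    """Hypothetical exception raised by `fetch` when the URL doesn't exist."""


def scrape_resumable(urls: List[str], fetch: Callable[[str], str],
                     done: Dict[int, str], failed: Dict[int, str],
                     max_retries: int = 3, wait: float = 5.0,
                     sleep: Callable[[float], None] = time.sleep) -> None:
    """Scrape urls[i] for every index i not yet in `done` or `failed`.

    `done` maps index -> HTML, `failed` maps index -> error message; both are
    filled in place. Any exception not handled below stops the loop.
    """
    for i, url in enumerate(urls):
        if i in done or i in failed:
            continue  # already handled in a previous run
        for attempt in range(1, max_retries + 1):
            try:
                done[i] = fetch(url)
                break
            except PageNotFound as e:
                failed[i] = f"not found: {e}"  # minor: record it and move on
                break
            except (ConnectionError, TimeoutError):
                if attempt == max_retries:
                    raise  # severe: stop, investigate, then rerun
                sleep(wait * attempt)  # back off before retrying
```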

Application of the laws

```r
save_path = "path/to/saved/webpages/"

# SCRAPING
all_files = list.files(save_path, full.names = TRUE)
i_start = length(all_files) + 1
n = length(all_webpages)
# seq() instead of i_start:n, which would count backwards once all pages are done
for(i in seq(i_start, length.out = max(0, n - i_start + 1))){
  status = scraper(all_webpages[i], save_path, prefix = paste0("id_", i, "_"))
  if(!identical(status, 1)){
    stop("Error during the scraping, see 'status'.")
  }
  # wait
  Sys.sleep(1.2)
}

# FORMATTING
all_pages = list.files(save_path, full.names = TRUE)
n = length(all_pages)
res = vector("list", n)
for(i in seq_len(n)){
  res[[i]] = formatter(all_pages[i])
}
```

Law 1: Thou shalt not be in a hurry

Sys.sleep(1.2) makes the loop wait 1.2 seconds between two page requests.

Law 2: Thou shalt separate the scraping from the formatting

The SCRAPING loop only saves the pages on disk; the FORMATTING loop runs afterwards, on the saved files.

Law 3: Thou shalt anticipate failure

```r
save_path = "path/to/saved/webpages/"

# SCRAPING
# of course, I assume URLs are UNIQUE and ONLY web pages end up in the folder
all_files = list.files(save_path, full.names = TRUE)
# restart right after the last page saved on disk
i_start = length(all_files) + 1
n = length(all_webpages)
for(i in seq(i_start, length.out = max(0, n - i_start + 1))){
  # files are saved on disk with the scraper function
  # we give an appropriate prefix to the files to make it tidy
  status = scraper(all_webpages[i], save_path, prefix = paste0("id_", i, "_"))
  # (optional) status equals 1 if everything is OK,
  # otherwise it contains information helping to debug
  if(!identical(status, 1)){
    stop("Error during the scraping, see 'status'.")
  }
  # wait
  Sys.sleep(1.2)
}
```

Law 3: Cont'd

```r
# FORMATTING
all_pages = list.files(save_path, full.names = TRUE)
n = length(all_pages)
res = vector("list", n)
for(i in seq_len(n)){
  # error handling can be looser here since the data formatting
  # is typically very fast. We can correct errors as we go.
  # If the formatting is slow, we can use the same procedure as for
  # the scraper, by saving the results on the hard drive as we advance.
  res[[i]] = formatter(all_pages[i])
}
```

Law 3: Still cont'd

```r
library(xml2) # read_html() and write_html()

scraper = function(url, save_path, prefix){
  # simplified version
  page = try(read_html(url), silent = TRUE)
  if(inherits(page, "try-error")){
    return(page)
  }
  # URLs contain characters such as "/" or ":" that are invalid in file names
  file_name = gsub("[^[:alnum:]]+", "_", url)
  write_html(page, paste0(save_path, prefix, file_name, ".html"))
  # is_page_content_ok() is a user-defined check (not shown)
  if(!is_page_content_ok(page)){
    return(page)
  }
  return(1)
}

formatter = function(path){
  # simplified version
  page = read_html(path)
  # is_page_format_ok() and extract_data() are user-defined (not shown)
  if(!is_page_format_ok(page)){
    stop("Wrong formatting of the page. Revise code.")
  }
  extract_data(page)
}
```

Once both loops have run, you may still need some extra formatting, but that really depends on the problem.

Laws make your life easy

Making the code fool-proof and easy to adapt requires some planning. But it's worth it!

If you follow the three laws of webscraping, you're ready to handle large projects in peace!

Dynamic web pages

Static vs Dynamic

- static: HTML in source code = HTML in browser

- dynamic: HTML in source code ≠ HTML in browser, because of javascript

The language of the web

Web's trinity:

- HTML for content

- CSS for style

- javascript for manipulation

What's JS?

A programming language

- javascript is a regular programming language with the usual features: conditions, loops, functions, classes

Which...

- specializes in modifying HTML content

Why JS?

imagine you're the webmaster of an e-commerce website

if you had no javascript and a client searched "shirt" on your website, you'd have to manually create the results page in HTML.

with javascript, you fetch the results of the query from a database and update the HTML content to display them, with real-time information

javascript is simply indispensable

JS: what's the connection to webscraping?

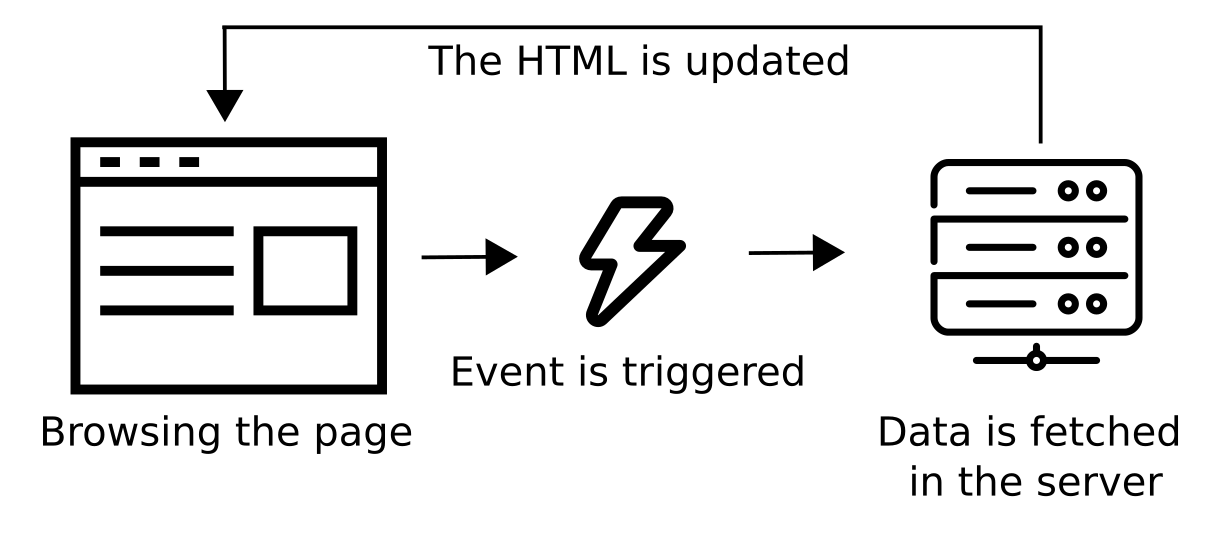

some webpages may decide to display some information only after some event has occurred

the event can be:

- the main HTML has loaded

- an HTML box comes, or is about to come, on-screen (e.g. think of Facebook)

- something is clicked

- etc!


JS: How does it work?

- you can add javascript in an HTML page with the <script> tag

Example:

```html
<script>
  let all_p = document.querySelectorAll("p");
  for(let p of all_p) p.style.display = "none";
</script>
```

- document.querySelectorAll("p") uses a CSS selector to select all paragraphs in the document.

- setting p.style.display = "none" on each of them removes all paragraphs from view.

Back to our webpage

Let's go back to the webpage we created in the previous lecture.

Add the following code:

```html
<button type="button" id="btn"> What is my favourite author?</button>
<script>
  let btn = document.querySelector("#btn");
  let showAuthor = function(){
    let p = document.createElement("p");
    p.innerHTML = "My favourite author is Shakespeare";
    // "this" is the clicked button: replace it with the new paragraph
    this.replaceWith(p);
  }
  btn.addEventListener("click", showAuthor);
</script>
```

What happened?

- you can access a critical piece of information only after an event has been triggered (here, the click)

- that's how dynamic web pages work!
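A quick way to see why a plain download is not enough: parse the example page above the way a simple scraper sees it, i.e. without running any javascript. In this Python sketch, PAGE is a hypothetical copy of that page as sent by the server, and the standard library's html.parser records which element encloses each piece of text.

```python
from html.parser import HTMLParser
from typing import List, Tuple

# Hypothetical copy of the example page, as the server sends it (no javascript run).
PAGE = """
<button type="button" id="btn"> What is my favourite author?</button>
<script>
  let btn = document.querySelector("#btn");
  let showAuthor = function(){
    let p = document.createElement("p");
    p.innerHTML = "My favourite author is Shakespeare";
    this.replaceWith(p);
  }
  btn.addEventListener("click", showAuthor);
</script>
"""

# Elements without a closing tag: never pushed on the stack.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class TextByTag(HTMLParser):
    """Record (innermost enclosing tag, text) for every non-blank text chunk."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: List[str] = []
        self.chunks: List[Tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in self.stack:
            # pop everything up to and including the matching start tag
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        if data.strip():
            enclosing = self.stack[-1] if self.stack else ""
            self.chunks.append((enclosing, data.strip()))


def texts_in(html: str, tag: str) -> List[str]:
    """Texts whose innermost enclosing element is `tag`, in the raw HTML."""
    parser = TextByTag()
    parser.feed(html)
    parser.close()
    return [text for enclosing, text in parser.chunks if enclosing == tag]
```

In the raw HTML there is no <p> element at all: the sentence only exists as a javascript string inside <script>. On many real sites the content is instead fetched from the server when the event fires, so it is not in the source code at all.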

Dynamic webpages: Can we scrape them?

- yes, but...


In other words...

We need a web browser.

Python + Selenium

Requirements

to scrape dynamic webpages, we'll use python in combination with selenium

you need:

- python 3.X + an IDE (e.g. PyCharm)

- to install selenium in python by running pip install selenium in the terminal

- to download the driver matching your browser (Chrome or Firefox) and put it on the PATH or in your working directory; since version 4.6, selenium can also fetch the driver automatically with Selenium Manager

Checking the install

If the installation is all right, the following code should open a browser:

```python
from selenium import webdriver

driver = webdriver.Chrome()
```

How does selenium work?

selenium controls a browser: typically anything that you can do, it can do

most common actions include:

- accessing URLs

- clicking on buttons

- typing/filling forms

- scrolling

- do you really do more than that?★
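Scrolling matters because of the "box close to on-screen" event seen earlier: many pages only load more content as you scroll. A common pattern, sketched here under assumptions, is to scroll to the bottom repeatedly until the page height stops growing. driver is assumed to be a Selenium WebDriver, of which only the execute_script method (which runs javascript in the page) is used; the pause and the round limit are arbitrary illustrative values.

```python
import time
from typing import Any, Callable


def scroll_until_stable(driver: Any, pause: float = 2.0, max_rounds: int = 50,
                        sleep: Callable[[float], None] = time.sleep) -> int:
    """Scroll to the bottom until the page height stops changing.

    Returns the number of scrolls performed (at most max_rounds).
    """
    height = driver.execute_script("return document.body.scrollHeight")
    for n_scrolls in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
        sleep(pause)  # give the page time to load the new content
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == height:
            return n_scrolls  # nothing new was loaded: we reached the end
        height = new_height
    return max_rounds
```

The round limit protects against truly infinite feeds; once the function returns, the page can be saved as usual.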

Selenium 101: An example

```python
# importing only the classes we'll use
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys

# launching the browser (empty at the moment)
driver = webdriver.Chrome()

# accessing the Stack Overflow URL
driver.get("https://stackoverflow.com/")

# first visit: accepting the cookies
btn_cookies = driver.find_element(By.CSS_SELECTOR, "button.js-accept-cookies")
btn_cookies.click()

# searching the posts containing "webscraping"
search_input = driver.find_element(By.CSS_SELECTOR, "input.s-input__search")
search_input.send_keys("webscraping")
search_input.send_keys(Keys.RETURN)
```

- Importing only the classes we'll use.

- Launching the browser, empty at the moment. Note that driver is only a generic name, taken from the previous example. It could be anything else.

- Accessing the Stack Overflow☆ URL.

- It's our first visit on the page, so cookies need to be agreed upon. After selecting★ the button to click with a CSS selector, we click on it with the click() method. Don't confuse find_element with find_elements (the s!): the former returns a single HTML element while the latter returns a list of elements.

- Finally we search the SO posts containing the term webscraping. We first select the input element containing the search text. Then we type webscraping with the send_keys() method and end by pressing enter (Keys.RETURN).

Saving the results

To obtain the HTML of an element:

```python
body = driver.find_element(By.CSS_SELECTOR, "body")
html_to_save = body.get_attribute("innerHTML")
```

The value of driver.find_element(By.CSS_SELECTOR, "body").get_attribute("innerHTML")☆ is the HTML code as it is currently displayed in the browser. It is not the source code of the page: on dynamic pages, the two can differ a lot!

Saving the results II

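To write the HTML in a file, you can simply do it the usual Python way:

```python
# path_to_save: the file path of your choice, e.g. "page_1.html"
with open(path_to_save, "w", encoding="utf-8") as out_file:
    out_file.write(html_to_save)
```

Good to know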

Scrolling (executes javascript)

```python
# scrolls down by 1000 pixels
driver.execute_script("window.scrollBy(0, 1000)")
# goes back to the top of the page
driver.execute_script("window.scrollTo(0, 0)")
```

Dynamic webpages: is that it?

Well, that's it folks!

You just have to automate the browser and save the results.

Then you can do the data processing in your favorite language.

Practice

Go to the Peugeot website.

- Scrape information on the price of the engines for a few models.

- Create a class extracting the information for one model.

- Run it on the first 3 models.